How trustworthy are this year’s presidential polls? On Monday, November 5, will they be able to tell us who is likely to win the election? We’ll know soon enough, but in the meantime the historical record provides some important context. This record suggests three things:

1) The polls have been fairly accurate. (Adverbs are always a bit subjective, so see what you think after you read the post.)

2) To the extent that they miss, they do so by over-estimating the frontrunner’s vote.

3) They miss not because of late movement among the undecideds but because of “no-show” voters who tell pollsters that they will vote but then don’t.

To show this, I will again draw on Robert Erikson and Christopher Wlezien’s Timeline of Presidential Elections. Their data include all live-interviewer presidential election trial heat polls from 1952-2008.

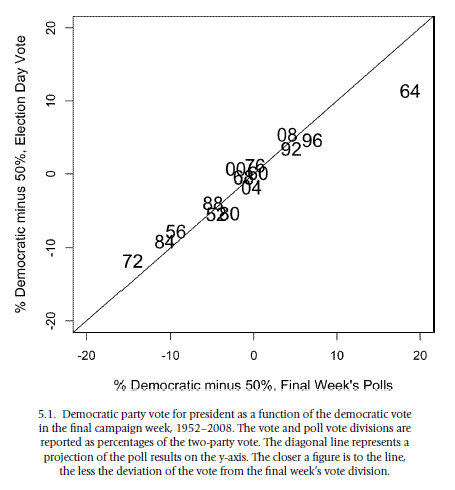

Below is their graph of the Democratic candidate’s poll margin plotted against the Democratic candidate’s margin in the actual two-party vote.

Clearly, the polls are very close to the actual outcome. Erikson and Wlezien write:

From the figure we can see that the polls at the end of the campaign are a good predictor of the vote—the correlation between the two is a near-perfect 0.98. Still, we see considerable shrinkage of the lead between the final week’s polls and the vote. The largest gap between the final polls and the vote is in 1964, when the polls suggested something approaching a 70–30 spread in the vote percentage (+20 on the vote −50 scale) for Johnson over Goldwater, whereas the actual spread was only 61–39.
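The arithmetic behind the 1964 example is easy to check. Here is a minimal sketch that uses the rounded figures from the quote (a poll share of roughly 70 and an actual share of 61); the function name `vote_minus_50` is my own label for their scale, not something taken from the book.

```python
def vote_minus_50(share_pct):
    """Convert a two-party vote share (in percent) to the 'vote minus 50' scale."""
    return share_pct - 50.0


# Rounded 1964 figures from the quoted passage (Johnson's two-party share).
poll_share = 70.0    # final polls: "approaching" 70-30, so this is approximate
actual_share = 61.0  # actual two-party result: 61-39

poll_lead = vote_minus_50(poll_share)      # +20 on the vote-minus-50 scale
actual_lead = vote_minus_50(actual_share)  # +11 on the same scale
shrinkage = poll_lead - actual_lead        # how far the lead shrank

print(f"poll: {poll_lead:+.0f}, vote: {actual_lead:+.0f}, shrinkage: {shrinkage:.0f} points")
```

On this scale the lead shrank by about 9 points between the final polls and the vote, which is why 1964 stands out from the other years.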

In very close elections, the polls are still quite close to the actual outcome—missing by 1-2 points at most. They slightly underestimated Gore’s share of the vote, for example. Of course, in a close election, 1-2 points is consequential. But it’s not reasonable to expect polls to call very close elections right on the nose, and small misses shouldn’t be taken as evidence for this astounding claim from former Secretary of Labor Robert Reich:

Pollsters know nothing. Their predictions are based on nothing. They exist solely to make astrologers and economic forecasters look good.

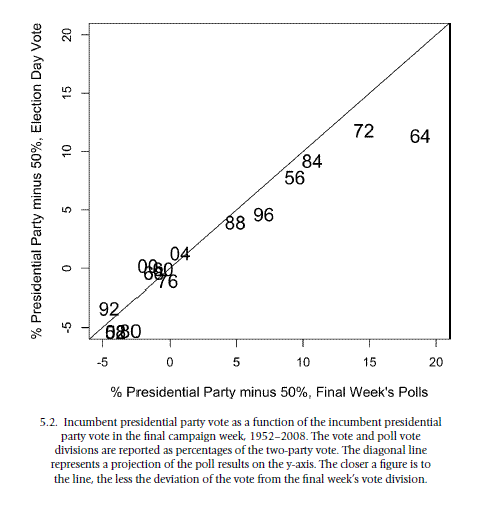

To the extent that polls “miss,” they tend to overestimate the share that the frontrunner will receive. A similar graph from Erikson and Wlezien shows this:

This is not because of a pro-incumbent bias in the polls. It is because the front-running candidate typically does a bit worse on Election Day than in the final polls, and that candidate is typically the incumbent:

This figure shows a clear tendency for the late polls to exaggerate the support for the presidential party candidate; that is, most of the observations are below the line of identity relating the polls and the vote. From the figure it also is clear that the underlying reason appears to be that leads shrink for whichever candidate is in the lead (usually the incumbent party candidate) rather than that voters trend specifically against the incumbent party candidate. When the final polls are close to 50–50, there is no evident inflation of incumbent party support in the polls.

Another observation is, again, that the largest “misses” are typically on the order of 1-2 points (with the exception of 1964) and rarely suggest a different candidate will win than actually won (this appears to have happened only in 2000). Ultimately, across these 15 elections, Erikson and Wlezien find no evidence of systematic bias, either for or against the incumbent’s party or one of the major parties.

In short, the polls are pretty good. Obviously, this judgment is somewhat in the eye of the beholder, but I think they deserve more credit than they often get. For example, I would not quite agree with Jay Cost’s assessment (here, here) that the polls “screwed the pooch” in 1968, 1976, 1980, 1992, 1996, 2000, and 2004. Granted, Erikson and Wlezien are simplifying here by looking only at the two-party vote, thereby ignoring third-party candidates (e.g., in 1968, 1980, 1992, and 1996). But the two-party vote is still a useful diagnostic, particularly in a year like 2012 where there is no salient third-party candidate.

Finally, as I mentioned previously, Erikson and Wlezien investigate why the frontrunner in the polls tends to do slightly worse on Election Day. This is mainly not about late movement among the undecideds but about “no-shows” who simply fail to vote:

Relatively few voters switch their vote choice late in the campaign. Relatively few undecided voters during the campaign end up voting, and those who do split close to 50–50. Moreover, there is no evidence that partisans “come home” to their party on Election Day (although this phenomenon occurs earlier in the campaign)….[O]ne additional factor…explains why late leads shrink. Many survey respondents tell pollsters they will vote but then do not show up. These eventual no-shows tend to favor the winning candidate when interviewed before the election. Without the preferences of the no-shows in the actual vote count, the winning candidate’s lead in the polls flattens.
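To see how no-shows alone can shrink a lead, here is a toy illustration. All the numbers are hypothetical, chosen only to make the mechanism visible; they are not estimates from Erikson and Wlezien’s data.

```python
def leader_share(groups):
    """Leader's share (in percent) across groups of (respondents, leader_supporters)."""
    total = sum(n for n, _ in groups)
    for_leader = sum(k for _, k in groups)
    return 100.0 * for_leader / total


# Hypothetical poll of 1,000 respondents who all say they will vote.
actual_voters = (800, 416)  # 800 really vote; 52% of them back the leader
no_shows = (200, 120)       # 200 stay home; 60% of them backed the leader

poll_share = leader_share([actual_voters, no_shows])  # what the poll reports
vote_share = leader_share([actual_voters])            # what the ballot box records

print(f"poll: {poll_share:.1f}%, vote: {vote_share:.1f}%, "
      f"overstatement: {poll_share - vote_share:.1f} points")
```

In this made-up case the poll shows the leader at 53.6 percent while the vote comes in at 52 percent, a gap of 1.6 points, even though no respondent changed their mind.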

Given the state of the national polls right now—a virtual tie—the historical record suggests that they will be very close to the eventual outcome, but could be off by 1 or even 2 points, depending on the vicissitudes of turnout. Given the historical lack of systematic bias toward either party or the incumbent party in close elections, it’s difficult to predict which “direction” the polls might miss this year, if they miss. The national polls tend to overestimate the frontrunner’s vote share, but with the national polls tied, there is no clear national frontrunner at the moment.

If this same pattern occurs in the state polls, then the battleground state outcomes should be narrower than the polls reflect, movement which might mitigate Obama’s apparent lead in Ohio and Romney’s apparent lead in Florida, for example. None of this leads to a dramatically different conclusion than you’ve been reading: the election will be close, turnout matters, etc. But I don’t think discrepancies between the polls and the outcome, in the past or in 2012, reflect or will reflect a massive failure by the polls (what we might call a “pooch-screwing”?), only the inevitable challenges that arise when using a very useful but imperfect instrument to predict the future.
