The answers to “How much should people sacrifice today for the benefit of those living several decades from now?” vary widely. This column suggests that people’s distaste for uncertainty – ambiguity aversion – favours immediate, rapid cuts in greenhouse gas emissions.

Most economists now agree on the need to control emissions of greenhouse gases, but there remains debate about how rapid the cuts should be. There has been an understandable, if simplistic, tendency to stylise the debate as “Stern versus Nordhaus”. In his eponymous Stern Review on the Economics of Climate Change (Stern 2007), sponsored by the UK government, Nicholas Stern advocated rapid cuts in emissions, starting immediately. He wasn’t the first economist to come to such a conclusion, but the prevailing view up to that point, epitomised – and indeed significantly informed – by William Nordhaus, was that policy should be more gradually ramped up (Nordhaus 2008).

It is commonly held that the source of disagreement between the Stern and Nordhaus camps is how to distribute consumption over time (i.e. as embodied in the discount rate). This is intuitive, since the dynamics of the climate system dictate that emissions cuts made today, at some cost, will not yield their benefits, in the form of avoided damage from climate change, for decades to come. Put another way, what sacrifice should be made today for the benefit of those living several decades from now?
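The tension can be made concrete with a simple present-value calculation. Assume, purely for illustration, that emissions cuts made today avoid 100 units of damage 50 years from now. The sketch below discounts that benefit at two constant annual rates, 1% and 4%. These rates and the function name `present_value` are hypothetical and are not the values used by Stern or Nordhaus.

```python
def present_value(benefit, rate, years):
    """Value today of `benefit` received `years` from now, at a constant annual `rate`."""
    return benefit / (1.0 + rate) ** years

# Hypothetical: cuts made today avoid 100 units of damage in 50 years' time.
BENEFIT, YEARS = 100.0, 50
for rate in (0.01, 0.04):  # illustrative discount rates only
    pv = present_value(BENEFIT, rate, YEARS)
    print(f"discount rate {rate:.0%}: present value = {pv:.1f}")
```

At the higher rate, the same future benefit is worth less than a quarter of its value at the lower rate. This is why the choice of discount rate weighs so heavily on how much sacrifice today looks worthwhile.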

Uncertainty about climate change

However, attention has recently shifted to another feature of the problem – the enormous uncertainty that surrounds the consequences of continued emissions of greenhouse gases and therefore the benefits of emissions cuts. Three factors come together to produce this uncertainty.

- The first is futurity, in particular the uncertain future socio-economic trends that determine the path of emissions, as well as how numerous and well off we will be when the impacts of today’s emissions occur.

- Second, there is the considerable complexity of the climate system, not to mention its linkages with ecosystems and the economy, which means that it is hard to know whether our models are a reasonable simplification.

- Third, there is the fact that the system is non-linear. This greatly increases the significance of model misspecification.
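A toy calculation shows why non-linearity magnifies disagreement between models. The sketch below takes two hypothetical models whose warming estimates differ by 50% (3°C versus 4.5°C, numbers chosen only for illustration). It passes both estimates through a hypothetical damage index D(T) = T^n, which is not taken from any model discussed here. With linear damages the models differ by a factor of 1.5. With quadratic damages the factor is 2.25, and with cubic damages it is about 3.4.

```python
# Hypothetical warming estimates (deg C) from two models that disagree.
warming_model_a = 3.0
warming_model_b = 4.5

def damage(temperature, exponent):
    """Hypothetical damage index proportional to temperature**exponent."""
    return temperature ** exponent

# The same disagreement about warming becomes a larger disagreement about
# damages as the damage function becomes more convex.
for exponent in (1, 2, 3):
    ratio = damage(warming_model_b, exponent) / damage(warming_model_a, exponent)
    print(f"exponent {exponent}: model B damages are {ratio:.2f} times model A's")
```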

The “climate sensitivity” example

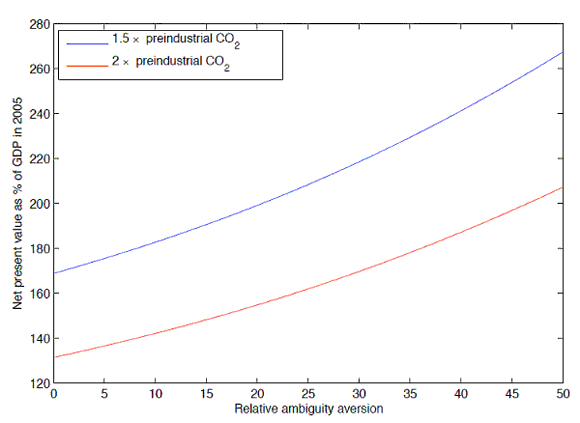

Images like Figure 1 are often held up as a leading example of this uncertainty. It plots estimates of the so-called “climate sensitivity” from 20 different studies, using a variety of models and observational datasets. The climate sensitivity is the increase in global mean temperature, in equilibrium, that accompanies a doubling of the atmospheric concentration of carbon dioxide (CO2). Two aspects of Figure 1 are important.

- First, notice that, whichever model is applied, the distribution is wide and skewed, with what we might loosely call a “fat tail” of low-probability, high-temperature outcomes.

This means that any cost-benefit analysis of emissions cuts that abstracts from uncertainty by working solely with a best guess of the climate sensitivity is likely to be misleading. Stern (2007) made this point, as did Weitzman (2009).

- Second, notice the obvious fact that the models disagree about the precise shape of the distribution, and that the disagreement between some pairs of models is large.

This, by contrast, is not an aspect of climate-change uncertainty with which economists have entirely come to grips.

Figure 1. Estimated probability density functions for the climate sensitivity from a variety of published studies, collated by Meinshausen et al. (2009).


The reason is simple. The standard economic tool for evaluating the net benefits of a risky policy is expected-utility analysis. You calculate the utility of the policy under each possible contingency, weight it by that contingency’s probability (a unique, quantitative estimate), and sum the results. This is the standard tool for good reason. If we know the unique probabilities of the various contingencies, then a persuasive case has been made that maximising expected utility is the rational choice.
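As a minimal sketch of that calculation, assume a utility function and a set of contingencies whose probabilities are known. The function name `expected_utility`, the choice of logarithmic utility and all the numbers below are hypothetical.

```python
import math

def expected_utility(probabilities, outcomes, utility):
    """Weight the utility of each contingency by its probability and sum."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to one")
    return sum(p * utility(x) for p, x in zip(probabilities, outcomes))

# Hypothetical example: consumption in three contingencies under one policy,
# each with a unique, known probability.
probabilities = [0.5, 0.3, 0.2]
consumption = [100.0, 80.0, 50.0]
print(expected_utility(probabilities, consumption, math.log))
```

Comparing two policies then amounts to comparing their expected utilities. This is exactly the step that becomes problematic when the probabilities themselves are in dispute.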

But what happens if we don’t know the unique probabilities of all the contingencies? Figure 1 suggests that, for the climate sensitivity, we don’t, because even the models we have disagree with one another.

Ambiguity aversion

It is possible to characterise two different answers.

- The first might be called “Bayesian”. It holds that, where models disagree, we should nevertheless work with unique estimates of probability, interpreting these estimates as subjective beliefs.

- The second answer has its roots in the famous Ellsberg paradox (Ellsberg 1961).

One of the two versions of the paradox begins with an urn containing 30 red balls and 60 black and yellow balls in unknown proportion. The decision-maker is presented with two choices between gambles. First, bet on (A) a red ball or (B) a black ball. Second, bet on (C) a red or yellow ball or (D) a black or yellow ball. In all four gambles the prize is the same. To satisfy the axioms of expected utility, the decision-maker should bet on A if and only if she believes that drawing a red ball is more likely than drawing a black ball.

Since C and D are simply A and B with yellow added as a winning colour in both, the same belief implies that C should be chosen over D.

But in fact most people choose A and D, the gambles whose winning probabilities are known (1/3 and 2/3 respectively). They therefore appear to prefer gambles with known probabilities over those with unknown probabilities. This has come to be known as “ambiguity aversion”.
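The inconsistency can be checked mechanically. The sketch below is a hypothetical illustration with the prize’s utility normalised to 1. It enumerates every possible number of black balls and confirms that none makes A better than B and D better than C at the same time. A single prior affects these bets only through the expected number of black balls, so the same conclusion holds for any prior. The sketch then values each gamble at its worst-case win probability, as in the maxmin expected-utility model associated with Gilboa and Schmeidler. That model is one standard formalisation of ambiguity aversion, not necessarily the one used later in this column, and it does reproduce the choice of A and D.

```python
from fractions import Fraction

RED = 30     # known number of red balls
OTHER = 60   # black and yellow balls, proportion unknown
TOTAL = RED + OTHER

def win_probabilities(black):
    """Chance that each gamble pays out when the urn holds `black` black balls."""
    yellow = OTHER - black
    return {
        "A": Fraction(RED, TOTAL),             # red
        "B": Fraction(black, TOTAL),           # black
        "C": Fraction(RED + yellow, TOTAL),    # red or yellow
        "D": Fraction(black + yellow, TOTAL),  # black or yellow
    }

compositions = range(OTHER + 1)  # 0..60 black balls

# Single-prior expected utility: look for a composition under which
# A beats B and D beats C at the same time.
consistent = []
for black in compositions:
    p = win_probabilities(black)
    if p["A"] > p["B"] and p["D"] > p["C"]:
        consistent.append(black)
print("compositions rationalising A and D:", consistent)

# Maxmin expected utility: value each gamble at its worst-case composition.
worst_case = {g: min(win_probabilities(b)[g] for b in compositions) for g in "ABCD"}
print("worst-case win probabilities:", worst_case)
print("A over B:", worst_case["A"] > worst_case["B"],
      "| D over C:", worst_case["D"] > worst_case["C"])
```

The first search returns an empty list. The worst-case values (1/3, 0, 1/3 and 2/3 for A to D) favour A over B and D over C, matching the typical Ellsberg choices.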

Importantly, unlike in various other decision-theoretic paradoxes, the decision-maker often sticks to her choice in this case, even when it is pointed out to her that she has violated one of the axioms of expected utility (Slovic and Tversky 1974). Therefore expected-utility analysis not only fails to predict human behaviour under ambiguity. Unlike with other “heuristics and biases”, a case can also be made that the behaviour people do exhibit – ambiguity aversion – is the correct yardstick for normative policy choices (Gilboa et al. 2009). Thus, while the debate between advocates of Bayesian subjective probability and advocates of ambiguity aversion goes on, it can certainly be argued that the latter is a legitimate approach.

Ambiguity and climate policy

In recent research (Millner et al. 2010), we extend cost-benefit analysis of climate change to consider a model of ambiguity aversion, and ask what effect ambiguity aversion has on climate policy prescriptions.

We begin by providing some analytical examples of conditions under which ambiguity aversion will lead to an increase in the value of emissions cuts. Perhaps the most important analytical result to grasp is that the more averse to ambiguity a decision-maker is, the more weight she will place on models that generate low expected utilities.

In Figure 1, this roughly means that the decision-maker puts more weight on models that estimate high global temperatures in response to CO2 emissions. All else being equal, such warming leads to greater damage from climate change, lower incomes and lower utilities. But the benefits of emissions cuts are also greater in these models, because more damage from climate change is avoided. So the greater the ambiguity aversion, the more weight is placed on models with higher estimates of the net benefits of emissions cuts. Admittedly, to derive this simple result we assume away uncertainty about the cost of cutting emissions. However, the uncertainty thought to attend the cost side is much lower than that on the benefits side, so we think this is a reasonable shortcut.
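A toy version of this argument makes the mechanism concrete. The sketch below assumes the “smooth ambiguity” model of Klibanoff, Marinacci and Mukerji. In that model, welfare is φ⁻¹(Σᵢ pᵢ φ(Uᵢ)), where Uᵢ is the expected utility computed within model i, pᵢ is a prior weight on that model, and the concave function φ captures ambiguity aversion. We take the exponential form φ(U) = −exp(−ηU)/η, where a larger η means more ambiguity aversion. This specification, the names `ambiguity_weights` and `certainty_equivalent`, and all the numbers are illustrative assumptions, not the DICE-based calculation in Millner et al. (2010). In the hypothetical data, expected utility falls and avoided damage rises with climate sensitivity, while the cost of cuts is the same in every model.

```python
import math

def ambiguity_weights(priors, model_eu, eta):
    """Effective model weights, proportional to p_i * exp(-eta * U_i).

    These equal p_i * phi'(U_i), normalised, for the assumed exponential
    ambiguity function phi(U) = -exp(-eta * U) / eta; eta = 0 returns the priors.
    """
    u_min = min(model_eu)  # shift utilities so exp() cannot overflow
    raw = [p * math.exp(-eta * (u - u_min)) for p, u in zip(priors, model_eu)]
    total = sum(raw)
    return [r / total for r in raw]

def certainty_equivalent(priors, model_eu, eta):
    """Ambiguity-adjusted welfare phi^{-1}(sum_i p_i * phi(U_i)).

    With the exponential phi this is -(1/eta) * log(sum_i p_i * exp(-eta * U_i));
    eta = 0 reduces to the Bayesian average of the models' expected utilities.
    """
    if eta == 0:
        return sum(p * u for p, u in zip(priors, model_eu))
    u_min = min(model_eu)
    s = sum(p * math.exp(-eta * (u - u_min)) for p, u in zip(priors, model_eu))
    return u_min - math.log(s) / eta

# Hypothetical inputs: three equally weighted models, ordered from low to
# high climate sensitivity, with expected utilities in arbitrary units.
priors = [1 / 3, 1 / 3, 1 / 3]
eu_no_cuts = [1.0, 0.8, 0.5]
avoided_damage = [0.02, 0.10, 0.30]  # benefits of cuts grow with sensitivity
cost = 0.05                          # cost of cuts, treated as certain
eu_cuts = [u - cost + b for u, b in zip(eu_no_cuts, avoided_damage)]

for eta in (0.0, 2.0, 10.0):
    w_high = ambiguity_weights(priors, eu_no_cuts, eta)[-1]
    value = (certainty_equivalent(priors, eu_cuts, eta)
             - certainty_equivalent(priors, eu_no_cuts, eta))
    print(f"eta={eta:>4}: weight on high-sensitivity model = {w_high:.2f}, "
          f"value of cuts = {value:.3f}")
```

At η = 0 the weights are just the priors and the value of cuts is its Bayesian average. As η grows, weight shifts towards the high-sensitivity model, and the value of cuts rises towards the difference between the two policies’ worst-case outcomes.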

But how large is this ambiguity premium, the extra value that ambiguity aversion adds to emissions cuts, in quantitative terms, and how does it compare with other related factors?

To answer these questions we adapted the “DICE” integrated assessment model, which William Nordhaus has used for many years to perform cost-benefit analysis of various climate policy proposals (Nordhaus 2008). We carried out cost-benefit analysis of two programmes of global emissions cuts that have been floated in international policy discussions in recent years. The first seeks to limit the atmospheric concentration of CO2 to twice its pre-industrial level. The second, more ambitious programme seeks to limit it to one-and-a-half times the pre-industrial level, and is amongst the most ambitious programmes on the table.
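For orientation, the two concentration limits can be translated into equilibrium warming under an assumption the column does not itself state. The assumption is that equilibrium warming rises approximately with the logarithm of the CO2 concentration, so that each doubling adds one climate sensitivity S. Under that approximation, ignoring other greenhouse gases and the lag before equilibrium is reached, and writing C_0 for the pre-industrial concentration:

```latex
\Delta T_{\mathrm{eq}} \approx S \,\log_2\!\left(\frac{C}{C_0}\right)
\quad\Rightarrow\quad
\Delta T_{\mathrm{eq}}\big|_{C = 2C_0} = S,
\qquad
\Delta T_{\mathrm{eq}}\big|_{C = 1.5C_0} = S \log_2 1.5 \approx 0.58\,S
```

Because S enters multiplicatively, the wide, fat-tailed spread of climate-sensitivity estimates in Figure 1 carries over directly into the warming that either programme might ultimately deliver.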

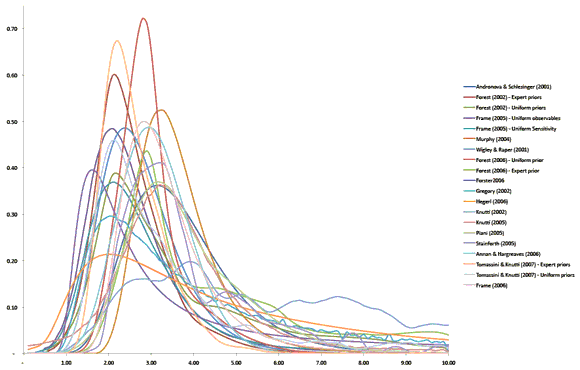

Figure 2. The net present value of greenhouse gas emissions cuts as a function of ambiguity aversion.

We find that the more ambiguity averse the policy-maker is, the greater the value of both emissions-cut programmes (Figure 2). Indeed, in a plausible scenario where very large amounts of warming trigger very large economic damages, the ambiguity premium accounts for the majority of the benefits of the policy and is much larger than the traditional effect of risk aversion.

We conclude that, from the point of view of climate policy, ambiguity aversion is another string to the bow of those advocating immediate, rapid cuts in greenhouse gas emissions. From the broader point of view of decision-making under uncertainty, the substantial effect of ambiguity aversion also implies that the debate between Bayesian and non-Bayesian approaches is a vital one that deserves much more attention.

References

• Ellsberg, D (1961), “Risk, ambiguity, and the Savage axioms”, Quarterly Journal of Economics, 75(4): 643-669.

• Gilboa, I, A Postlewaite et al. (2009), “Is it always rational to satisfy Savage’s axioms?”, Economics and Philosophy, 25(3): 285-296.

• Meinshausen, M, N Meinshausen et al. (2009), “Greenhouse-gas emission targets for limiting global warming to 2 °C”, Nature, 458(7242): 1158-1162.

• Millner, A, S Dietz et al. (2010), “Ambiguity and climate policy”, NBER Working Paper 16050.

• Nordhaus, WD (2008), A Question of Balance: Weighing the Options on Global Warming Policies, Yale University Press.

• Slovic, P and A Tversky (1974), “Who accepts Savage’s axiom?”, Behavioral Science, 19(6): 368-373.

• Stern, N (2007), The Economics of Climate Change: The Stern Review, Cambridge University Press.

• Weitzman, ML (2009), “What is the ‘damages function’ for global warming and what difference might it make?”, Harvard University mimeo.
