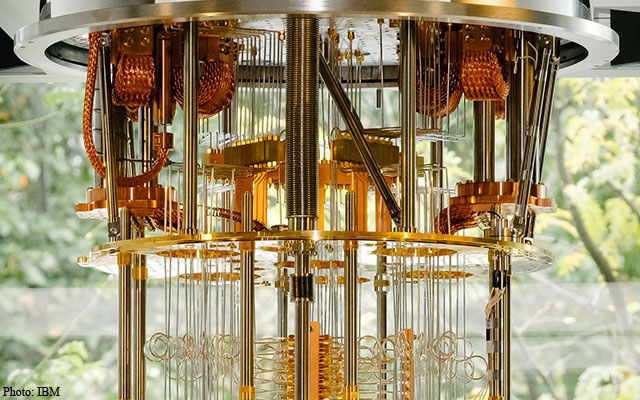

IBM’s recent announcement that it has built an operational quantum computer prototype capable of handling 50 qubits (quantum bits) puts the New York-based tech giant on the cutting edge of quantum computing research. The newly built processor, currently seen as the most advanced quantum machine ever built, has the capacity to surpass the computational capabilities of our most powerful supercomputers.

Using the counter-intuitive nature of quantum physics, IBM’s team of scientists managed to preserve the prototype’s quantum state for a total of 90 microseconds (μs) of coherence, a record for the industry.

“We are really proud of this; it’s a big frickin’ deal,” IBM’s artificial intelligence (AI) and quantum computing director Dario Gil, who made the Nov. 10 announcement at the IEEE Industry Summit on the Future of Computing in Washington DC, told the MIT Technology Review.

IBM’s achievement is not only a significant landmark in progress toward practical quantum computers, but also a phenomenal accomplishment that happened far quicker than any expert predicted. The company also unveiled at the IEEE Summit what it called a 20-qubit high-fidelity quantum operations processor, featuring improvements in superconducting qubit design and connectivity. IBM said third-party users will be able to access the machine through its cloud-based computing platform.

It should be noted, though (and without taking anything away from IBM’s remarkable achievement), that while the 50-qubit prototype’s quantum state was maintained for a record 90 μs, that period is still extremely short. The limit stems from a persistent problem with ‘noisy’ data, compounded by the fact that as more qubits are added, the system’s tendency toward computational errors grows too, including issues with how well connected the qubits are. Needless to say, quantum computing is not yet ready for common use, and will not be until a way to tolerate or correct those errors is developed.
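As a rough illustration of why errors compound as a system grows, the sketch below uses a deliberately simple toy model: every operation is assumed to fail independently with the same probability. Both the model and the error rate are assumptions made for illustration; they are not IBM’s figures and do not describe how the prototype actually behaves.

```python
def success_probability(error_rate, operations):
    """Chance that every operation succeeds, assuming independent errors."""
    return (1.0 - error_rate) ** operations


# Hypothetical per-operation error rate, chosen for illustration only.
ASSUMED_ERROR_RATE = 0.01

for operations in (10, 100, 1000):
    chance = success_probability(ASSUMED_ERROR_RATE, operations)
    print(f"{operations:>5} operations: {chance:.4f} chance of an error-free run")
```

Even with a small per-operation error rate, the chance of an error-free run falls quickly as the number of operations rises, which is the intuition behind the need for error tolerance.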

To better understand why qubits become susceptible to errors when the system tries to add more processing power, one should consider that this new form of advanced computing works quite differently from traditional computers.

Unlike today’s computers, which process information using binary bits that can only be either 0 or 1, a quantum machine exploits two fundamental principles of quantum mechanics: ‘superposition’, in which a quantum system exists in a weighted combination of states at once, and ‘entanglement’, in which two photons or other quantum bodies behave as a single unit even if they are spatially separate. Its units of quantum information (qubits) can therefore be a 0 and a 1 simultaneously, in weighted combinations. To be more specific, while in a superposition state one qubit can perform calculations on two values at once, and two qubits can perform calculations on four values simultaneously: 00, 01, 10, and 11. Similarly, three qubits can perform calculations representing eight values simultaneously, and so on, with the number doubling for every qubit added.
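The counting in the paragraph above can be sketched in a few lines of Python. This is only a classical illustration, not a quantum simulation: it lists the bit strings an n-qubit register can represent and assigns each an equal weight, which is one simple example of a superposition. The function names are made up for this example.

```python
from itertools import product


def basis_states(n_qubits):
    """Return every bit string an n-qubit register can be measured as."""
    return ["".join(bits) for bits in product("01", repeat=n_qubits)]


def uniform_superposition(n_qubits):
    """Give each basis state the same amplitude; squared amplitudes sum to 1."""
    states = basis_states(n_qubits)
    amplitude = (1 / len(states)) ** 0.5
    return {state: amplitude for state in states}


print(basis_states(2))       # ['00', '01', '10', '11']
print(len(basis_states(3)))  # 8
print(uniform_superposition(2))
```

Each extra qubit doubles the length of the list, so n qubits correspond to 2^n weighted values.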

Now we have to keep in mind what IBM researcher Edwin Pednault notes: “fifty qubits can represent over one quadrillion values simultaneously, and 100 qubits over one quadrillion squared.” Pednault’s “bristle brush” moment, by the way, became the basis of a fault-tolerance theory for addressing the system’s ‘noisy’ data, which made the 50-qubit quantum computer prototype possible.
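Pednault’s figures are easy to check with ordinary integer arithmetic. The short sketch below does so, and also estimates how much memory a classical machine would need to hold one number per value. The 16 bytes per value is an assumption (one double-precision complex number), not something stated by IBM.

```python
QUADRILLION = 10 ** 15

# Assumption: each value is stored as one complex number of 16 bytes.
BYTES_PER_AMPLITUDE = 16


def values_represented(n_qubits):
    """Number of values n qubits can represent simultaneously (2^n)."""
    return 2 ** n_qubits


def memory_pebibytes(n_qubits):
    """Memory needed to store one amplitude per value, in pebibytes."""
    return values_represented(n_qubits) * BYTES_PER_AMPLITUDE / 2 ** 50


print(values_represented(50))                                  # 1125899906842624
print(values_represented(50) > QUADRILLION)                    # True
print(values_represented(100) == values_represented(50) ** 2)  # True
print(memory_pebibytes(50))                                    # 16.0
```

Under that storage assumption, writing down the full state of 50 qubits would take about 16 pebibytes.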

We are already talking about great computational power here, which gives IBM not only the basis for its future quantum computers but also processing capabilities that would otherwise be extremely difficult, if not impossible, to simulate without quantum technology.

Quantum computing is still in its infancy, but this computational power alone makes IBM’s prototype a leap in capability. The achievement brings us one step closer to a future where quantum technology will not only accomplish tasks beyond the scope of present-day computers, but also help us solve, whether through nanotechnology, physics, or chemistry research, many of the world’s most difficult and long-standing problems.

IBM first launched a working quantum computer in May 2016. Dubbed the IBM Quantum Experience, the 5- and 15-qubit systems, which the company made available for public access for the first time, marked the “beginning of the quantum age of computing and the latest advance from IBM towards building a universal quantum computer.”

IBM’s Gil said: “These latest advances show that we are quickly making quantum systems and tools available that could offer an advantage for tackling problems outside the realm of classical machines.”

