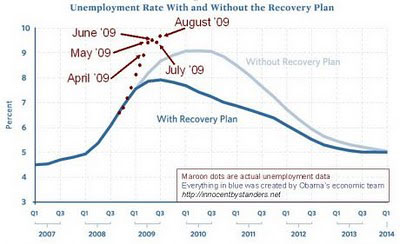

Nobel prize winner Paul Krugman may say it’s econ 101 to have the federal government spend more in recessions, and I agree that it is, but it’s also wrong. The highly rigorous yet ultimately naively simplistic macro models have not been useful in correcting this mistaken intuition, which remains dominant. Gone are the days of 1920, when, during a nasty recession, the federal government cut expenditure in half from 1920 to 1922, and the economy rebounded nicely. Historically, whenever we are in a recession, economists generally call for more fiscal spending, all the better if it is deficit-financed. Yet as Milton Friedman noted, “The fascinating thing to me is that the widespread faith in the potency of fiscal policy … rests on no evidence whatsoever.” Evidence, no; theory, yes (currently, unemployment is 9.7%).

You can create neat models where you have consumers maximizing their utility over time and producers maximizing profits, and, if you make some very heroic assumptions, you can anthropomorphize this situation as a single individual who supposedly represents millions of people acting this way. Add some assumptions about time-series shocks to productivity and tastes, and you can then calibrate the interesting variables (output, investment) and say, “I have a viable model of the macroeconomy.”

This always seemed a bit strained, but it is typical of a lot of models I see, where a model is evaluated on its ability to generate output with similar ‘moments’: means, variances, covariances. Correlation does not imply causation, but it’s a dirty little secret that probably 90+% of actual science in practice involves mere correlations with ex post theorizing. I remember most of my fellow grad-school classmates thought these models were rather naive, as if anthropomorphizing the economy as a single person explains growth rates and business cycles. Over time, though, those who stayed with the program got sucked in, the way individuals are absorbed into the Borg collective, and the rest of us, most of us, decided to chuck macro entirely.

Many scientists spend their lives on these models, where an intuitive story motivates an algorithm (ideally one with maximizing behavior by decision makers and a supply=demand condition) that is then jerry-rigged to fit observed output. Given the many degrees of freedom, it’s very easy to generate models that seem to work really well because they create time series that, when you eyeball them, look like the desired time series (this seems to encourage a lot of econophysics efforts). This is a really bad way to evaluate theories, because it’s too hard to falsify, and too easy to convince yourself the model works.
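To make the degrees-of-freedom point concrete, here is a toy sketch in Python. It is my own illustration, not any published macro model, and every function name and parameter value in it is made up. The “data” come from a hypothetical regime-switching process, where growth flips between a boom level and a bust level. The “model” is a plain AR(1) with no regimes at all, whose three free parameters (mean, persistence, shock size) are calibrated to hit the data’s three target moments: mean, variance, and first-order autocorrelation.

```python
import random
import statistics


def moments(xs):
    """Return (mean, variance, lag-1 autocorrelation) of a series."""
    m = statistics.fmean(xs)
    v = statistics.pvariance(xs, mu=m)
    # Lag-1 autocovariance, normalized by n like pvariance.
    cov1 = sum((a - m) * (b - m) for a, b in zip(xs, xs[1:])) / len(xs)
    return m, v, cov1 / v


def regime_switch(n, seed):
    """Hypothetical 'true economy': growth flips between a boom and a bust level."""
    rng = random.Random(seed)
    state, out = 0, []
    for _ in range(n):
        if rng.random() < 0.1:  # 10% chance per period of switching regime
            state = 1 - state
        level = 3.0 if state == 0 else -1.0
        out.append(level + rng.gauss(0.0, 0.5))
    return out


def ar1(n, mu, rho, sigma, seed):
    """Hypothetical 'model': x_t = mu + rho * (x_{t-1} - mu) + shock."""
    rng = random.Random(seed)
    x, out = mu, []
    for _ in range(n):
        x = mu + rho * (x - mu) + rng.gauss(0.0, sigma)
        out.append(x)
    return out


data = regime_switch(5000, seed=1)
m, v, r = moments(data)

# Calibrate the model's three free parameters to the three target moments.
# The stationary AR(1) variance is sigma^2 / (1 - rho^2), so solve for sigma.
sigma = (v * (1.0 - r * r)) ** 0.5
model = ar1(5000, mu=m, rho=r, sigma=sigma, seed=2)

data_moments = moments(data)
model_moments = moments(model)
print("data  (mean, var, autocorr):", data_moments)
print("model (mean, var, autocorr):", model_moments)
```

The two rows of moments should come out close, up to sampling noise, even though the mechanisms are completely different. You would only tell them apart by checking something the calibration did not target, such as the bimodal distribution of the regime-switching series or out-of-sample forecasts.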

Note how totally irrelevant the representative agent model was to the latest recession. No one thinks any mechanism highlighted by the representative agent framework was really important. To some extent, the low interest rates of 2002-2005 were relevant, and this effect falls within the representative agent models, but I don’t think this was really an essential part of the crisis, and the representative agent models don’t make this connection clearer or more measurable.

There have been several Nobel prizes in this modeling dimension (Lucas, Kydland, Prescott), and I think they were valiant efforts, but it’s important to recognize failures and move on, because wasting time on a dead end only helps aging researchers who can’t develop a new toolset maintain their citation counts. I’m rather unimpressed by the common tactic of using citations to validate research: suggestive, but hardly definitive. Unfortunately, bad ideas are rarely rejected; rather, they are orphaned, ignored by young researchers. After all, proving something is wrong is hard, especially if that something is not so much a theory as a framework (the representative agent model). It’s best to look at the gestalt, say, “I’m just not going there,” and leave it at that. So the models of risk and expected return persist because they are intuitive on some level and can generate results that seem to match broad patterns. They just can’t predict, nor do they underlie any actually useful fiscal policy.

The worst thing is that the focus on mathematics allows a researcher to avoid these problems, because there’s always the hope that even if one’s model dies, the mathematical techniques used to create it will live on. It allows one to revel in rigor and dismiss any particular model’s empirical failures. Many economists, I think, dream of being like string theorist Ed Witten: a Fields Medal winner whose work centers on an applied subject (not coincidentally, an untestable theory).

The insiders will say you’re just a player-hater who doesn’t understand, let alone appreciate, the math. It’s true that critics will not understand the tools as well as the true believers, because if you think it’s a waste of time, you aren’t going to get really good at these tools. So the bottom line is: do they generate useful predictions? Do large banks hire top-level macroeconomists? No and no. Hard-core macroeconomic theory has been pretty irrelevant to the latest recession, as it always has been.

For example, the stimulus bill was debated by the famous economists Paul Krugman (a trade theorist), who argued for the government spending money on anything, and Robert Barro (a macro theorist), who said the government should cut taxes. Robert Barro may be a full-time macroeconomist who imposes more of the representative agent logic on his theories, but his advice is considered only as relevant as the musings of Paul Krugman, who has never done actual macroeconomics at the peer-reviewed level (in Barro’s words, ‘His work is in trade stuff. He did excellent work, but it has nothing to do with what he’s writing about.’). But that’s the result of having a framework that explains everything and nothing: not even economists feel constrained by it. They know that even though it’s not their full-time gig, it’s no constraint on their opinions; it’s not as if some macroeconomist will embarrass them by pointing out a flaw in their logic. Such theory can justify any standard Keynesian, Monetarist, or laissez-faire prejudice, making it irrelevant.